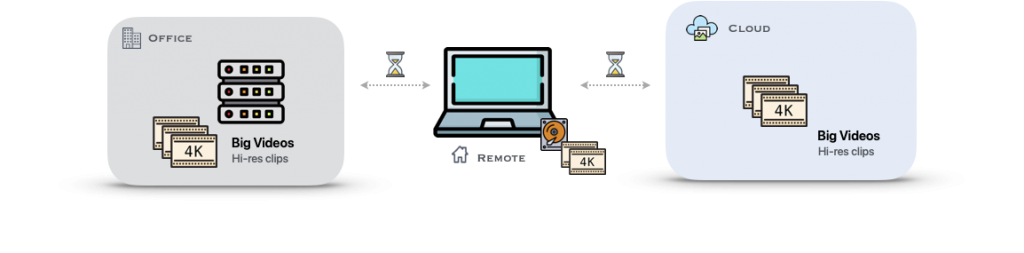

Since COVID-19, every customer that deals with large digital assets or media files has struggled to give staff remote access. Massive file sizes, multiple on-premises storage silos, and the need for access from home or other remote locations cause a lot of IT headaches. In this article we look at best practices for avoiding the common pitfalls when architecting remote workflows.

Challenges

There are two key challenges to overcome with large media files:

- Remote locations need to transfer large videos between the office, the cloud, and portable drives over the limited-bandwidth, high-latency WAN connections that are the norm on the public Internet

- Transfer times for large video files can be slow over the public Internet or office VPNs with any protocol, be it SMB or a custom network file share, because no protocol can defy the laws of physics when it comes to packet latency
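The latency point above can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a single stream that can keep at most one fixed-size window of unacknowledged data in flight per round trip; the function name, the 64 KiB window, and the round-trip times are illustrative assumptions, not measurements from this article.

```python
def max_stream_throughput_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one stream's throughput: one window per round trip."""
    if window_bytes <= 0 or rtt_ms <= 0:
        raise ValueError("window_bytes and rtt_ms must be positive")
    bits_per_round_trip = window_bytes * 8
    round_trips_per_second = 1000.0 / rtt_ms
    return bits_per_round_trip * round_trips_per_second / 1_000_000


if __name__ == "__main__":
    window = 64 * 1024  # assumed 64 KiB window, for illustration only
    # Assumed round-trip times: office LAN, nearby WAN, long-haul WAN/VPN.
    for rtt in (1, 20, 100):
        ceiling = max_stream_throughput_mbps(window, rtt)
        print(f"RTT {rtt:>3} ms -> at most {ceiling:.1f} Mbps per stream")
```

Real network stacks can negotiate larger windows, so actual numbers will differ, but for any fixed window the ceiling falls in proportion to the round-trip time, regardless of how fast the link itself is.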

COVID-19 has upended remote workflows with large videos. Stay-at-home editors and creatives need faster network transfer times, color accuracy, and access to high-resolution assets to conform.

Facts

- Creatives/Editors can’t go to the office to access high-performance edit stations or large videos

- 4K and other high-res media sits on office SAN/NAS storage connected via 10 Gbit Ethernet or Fibre Channel to on-premises edit stations

- Home office download speeds below 150 Mbps and upload speeds below 50 Mbps are typical

- VPN access servers weren’t designed for peak load, i.e. all staff working remotely

- Color accuracy and high-res audio/video streams are difficult to achieve with Windows RDP or Apple Screen Sharing to an office computer via VPN

Myths

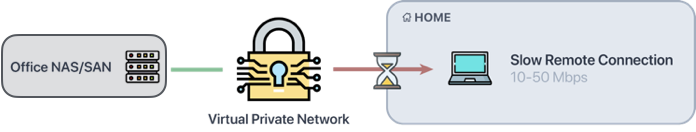

- IT thinks provisioning a VPN is enough to get creatives working from home

- Editors are going back to the office soon, so we can just wait it out

- Mounting network shares via VPN is enough to copy high-res media from office shared storage

- Migrating high-res media to the cloud will take weeks if not months

- If only we could mount cloud storage like a shared file system, all problems would be solved

Impact of VPN bandwidth on media transfer

Business as usual with VPN doesn’t scale for stay-at-home editors because:

- Corporate bandwidth available via VPN is often a fraction of what’s available through a cloud connection from home

- With only a 50 Mbps VPN, transferring a 1-hour RED 4K card from home to the office could take 6+ days

- Transferring a 1-hour RED 4K card from the office to home could take 6+ days with 5 Mbps of VPN upload bandwidth

- Without reliable transfer capabilities like auto-resume, interrupted transfers could take even longer

- Many companies simply try to cope via sneakernet, sending USB drives around, or by mounting a shared file system over VPN
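The arithmetic behind transfer-time estimates like these is simple enough to sketch. The helper below is a rough estimator, not a measurement: the function name, the 500 GB payload, and the `efficiency` factor (the share of nominal bandwidth a transfer actually achieves) are assumptions for illustration. Plug in your own camera card size and link speed.

```python
def transfer_days(size_gb: float, bandwidth_mbps: float, efficiency: float = 1.0) -> float:
    """Estimate days needed to move size_gb (decimal gigabytes) over a link.

    efficiency is the assumed fraction of nominal bandwidth actually achieved
    (protocol overhead, contention with other VPN users, retries).
    """
    if size_gb < 0 or bandwidth_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid size, bandwidth, or efficiency")
    bits = size_gb * 1e9 * 8
    seconds = bits / (bandwidth_mbps * 1e6 * efficiency)
    return seconds / 86_400


if __name__ == "__main__":
    assumed_size_gb = 500  # hypothetical payload, not a figure from this article
    for mbps in (5, 50, 150):
        ideal = transfer_days(assumed_size_gb, mbps)
        congested = transfer_days(assumed_size_gb, mbps, efficiency=0.5)
        print(f"{mbps:>3} Mbps: {ideal:.2f} days ideal, {congested:.2f} days at 50% efficiency")
```

The estimate assumes an uninterrupted transfer. Without auto-resume, every interruption restarts the clock, so real-world times can only be longer.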

Solutions that don’t work

Now that we have looked at the challenges of transferring media files over the WAN (Internet), let’s take a look at simplistic solutions that don’t work, and why:

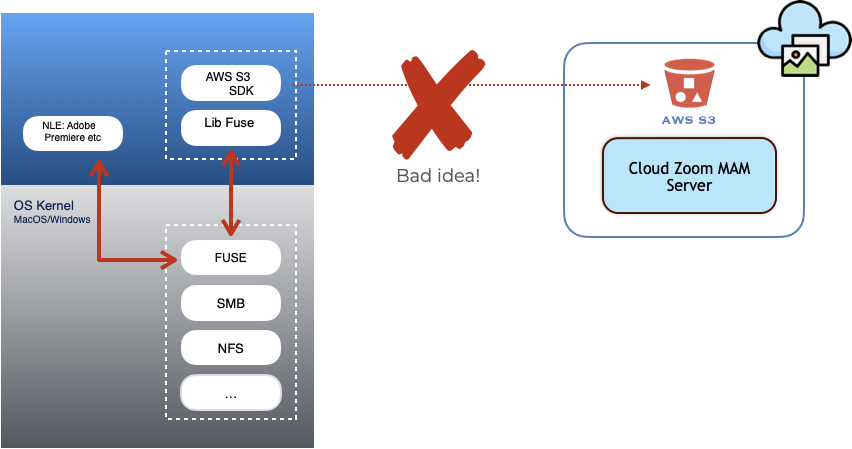

AWS S3/Cloud Object Storage based File System Driver

Tempting as it is to abstract cloud object storage (S3, Azure Blob, etc.) as a mounted file system on a remote desktop such as a Mac, run 100 miles away from it.

There are a number of S3 or cloud drive solutions around, including free/open source software, that can easily expose an S3 (or other cloud object storage) bucket and connect it to your Mac or Windows edit station, similar to Dropbox. These are generic drive solutions that can be set up with any MAM, including Evolphin Zoom, in the cloud. They all rely on a technology called “FUSE” or an equivalent, which pairs a kernel extension with a user-space driver that talks to the cloud object storage via an SDK such as the AWS S3 SDK. Here is a brief list of issues with such an approach:

- System crashes: For production workloads, especially those involving large media files, these kernel drivers interacting with a process outside the kernel can cause random crashes. For instance, see this tech note for Google Drive crashing macOS Catalina. That it worked in a demo or a brief POC is no guarantee that these crashes will not happen in production, given the myriad of environmental factors such as new OS releases, kernel security upgrades, etc. The SDKs have bugs, and when coupled with a FUSE-like file system, an API hang in the S3 SDK on your desktop can crash your entire OS. For instance, Evolphin recently found a serious download API bug in the AWS S3 API that would cause large downloads to hang. With our S3 hub it was easy to restart the hub to get around it, but with a file system drive it would require a system reboot!

- Lag, Dropped Frames: Cloud object storage such as S3 has 100s of milliseconds of latency per API call. Unlike normal on-premises network storage, object storage is designed around an unlimited-storage abstraction, at the cost of high access latency. Scrubbing video frames requires very low-latency frame delivery to the NLE, even with a low-res proxy. The latency of S3 or other object storage can’t be guaranteed and can sometimes grow as large as 10s of seconds, causing an unpleasant edit experience with lag, stutter and dropped frames. Often in a demo scenario, the vendor may have had the entire file cached on the desktop to negate the effect of packet latencies, so the demo will not reflect real-world network conditions.

- Egress cost: Streaming or downloading from an S3 bucket every time you need to access a file will run up a large egress bill with the cloud provider. To avoid that, these file systems often cache the entire file on your disk, which defeats the purpose of streaming via the S3 file driver in the first place!

- Security: When opening your cloud object storage to devices around the world, you need to worry about access control as well as encryption of the streams going over the WAN. The gold standard on the Internet for encrypting remote connections is TLS/SSL. If this is used with these cloud file system drivers, it slows down the transfers even more. Often in simple demos or proofs of concept, security settings might be turned off to make the S3 drive look better, but you would never deploy insecure HTTP connections to S3 in production.

- File Corruption risk: File system drivers are finicky, and the mature ones have been stabilized over decades by their providers to achieve stability and data consistency. Due to the inherent caching done by these remote file system drivers, file metadata updates such as renames, moves or even modifications can run into what is called a “write-consistency” issue. In simple terms, that means even if you think you have saved a file, the cloud storage might not have committed your file changes, causing data loss or file corruption. In a collaborative scenario with multiple editors, this becomes even more severe. AWS S3, for example, doesn’t make any guarantees on when updates to a file will complete, and you will see larger inconsistency windows when S3 is under heavy load or having operational issues.
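The “Lag, Dropped Frames” point in the list above comes down to a timing budget, which a small calculation can illustrate. This is a simplified model, assuming one storage request per frame and a fixed per-request latency; the function names and the latency values are hypothetical and chosen only to match the orders of magnitude mentioned above.

```python
import math


def frame_interval_ms(fps: float) -> float:
    """Time available to deliver each frame during real-time playback."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_in_flight_needed(latency_ms: float, fps: float) -> int:
    """Frames that must already be requested ahead of the playhead so that
    one frame arrives per frame interval despite the request latency."""
    if latency_ms < 0 or fps <= 0:
        raise ValueError("latency_ms must be >= 0 and fps must be positive")
    return math.ceil(latency_ms * fps / 1000.0)


if __name__ == "__main__":
    fps = 24  # assumed timeline frame rate
    print(f"Budget per frame at {fps} fps: {frame_interval_ms(fps):.1f} ms")
    # Assumed latencies: LAN storage, typical object storage call, bad spike.
    for latency in (5, 200, 10_000):
        ahead = frames_in_flight_needed(latency, fps)
        print(f"{latency:>6} ms latency -> {ahead} frame(s) must be prefetched")
```

Read-ahead of this kind can mask latency during linear playback, but scrubbing jumps to positions the driver cannot predict, so each jump is likely to pay the full request latency unless the file is already cached locally.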

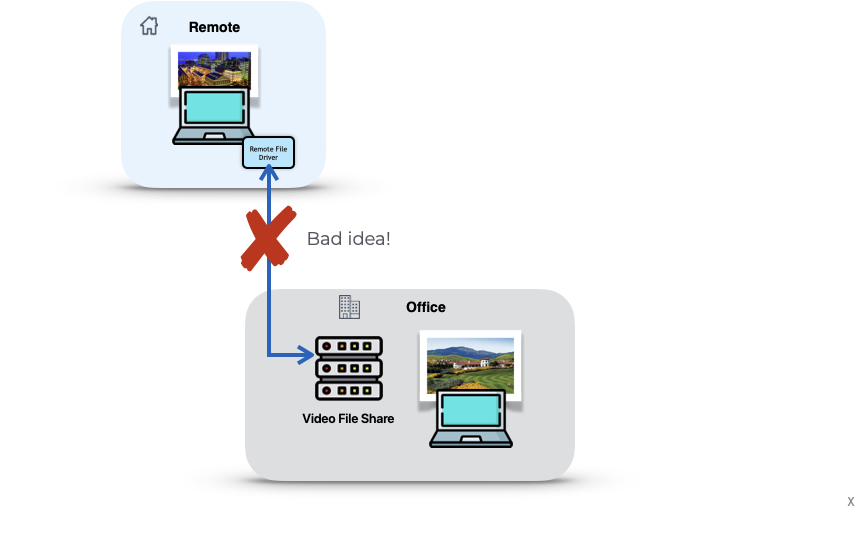

Network File Shares Accessed Remotely

If you have shared storage at the office with terabytes of data, including high-res videos, it is tempting to open a hole in your corporate firewall and allow editors to access the office file system remotely using a file system driver over the Internet/WAN. This approach runs into obstacles similar to those of the S3 drivers.

As with the S3 file drivers, there are a number of vendors and open source solutions that purport to provide easy file system access to your office storage. These can technically be set up with any MAM, but run into the following problems:

- VPN Bottleneck: To securely punch a hole through your office firewall, a VPN is the well-established means of creating a secure network tunnel for your remote staff. VPN servers are notoriously slow, as they are a single point of contention shared with all office staff, including those unrelated to media production, accessing office systems. Any file sharing protocol, be it SMB, NFS, AFP or a custom protocol, will run into the VPN brick wall. To mitigate that, vendors might suggest opening a direct port to the file share without a VPN. That will not pass any security audit. One of our broadcast customers tried that with their older MAM prior to switching to Evolphin Zoom and suffered a ransomware attack on the storage that crippled the entire office.

- Lag, Dropped Frames: Scrubbing video frames requires very low-latency frame delivery to the NLE, even with a low-res proxy. Latency on the Internet/WAN can’t be guaranteed and can sometimes stretch into seconds, causing an unpleasant edit experience with lag, stutter and dropped frames. Often in a demo scenario, the vendor may have had the entire file cached on the desktop to negate the effect of packet latencies, so the demo will not reflect real-world network conditions.

- File Corruption risk: File system drivers are finicky, and the mature ones have been stabilized over decades by their providers to achieve stability and data consistency. Due to the inherent caching done by these remote file system drivers, file metadata updates such as renames, moves or even modifications can run into what is called a “write-consistency” issue. In simple terms, that means even if you think you have saved a file, the office storage system might not have committed your file changes, causing data loss or file corruption. In a collaborative scenario with multiple editors, this becomes even more severe.

What’s the solution?

If the above approaches don’t work, what are the options? Please check this video presentation that describes the feasible solutions.